For a $3,499 device that’s designed to replace your phone, laptop, watch, tablet, television, and even your mouse, you bet that Apple’s Vision Pro is absolutely crammed with sensors that track you, your movements, eyesight, gestures, voice commands, and your position in space. As per Apple’s own announcement, the Vision Pro has as many as 14 cameras on the inside and outside, 1 LiDAR scanner, and multiple IR and invisible LED illuminators to help it get a sense of where you are and what you’re doing. On top of this, the headset has a dedicated R1 Apple silicon chip that crunches data from all these sensors (and a few others), forming the backbone of Apple’s gradual shift towards “Spatial Computing”.

What is “Spatial Computing”?

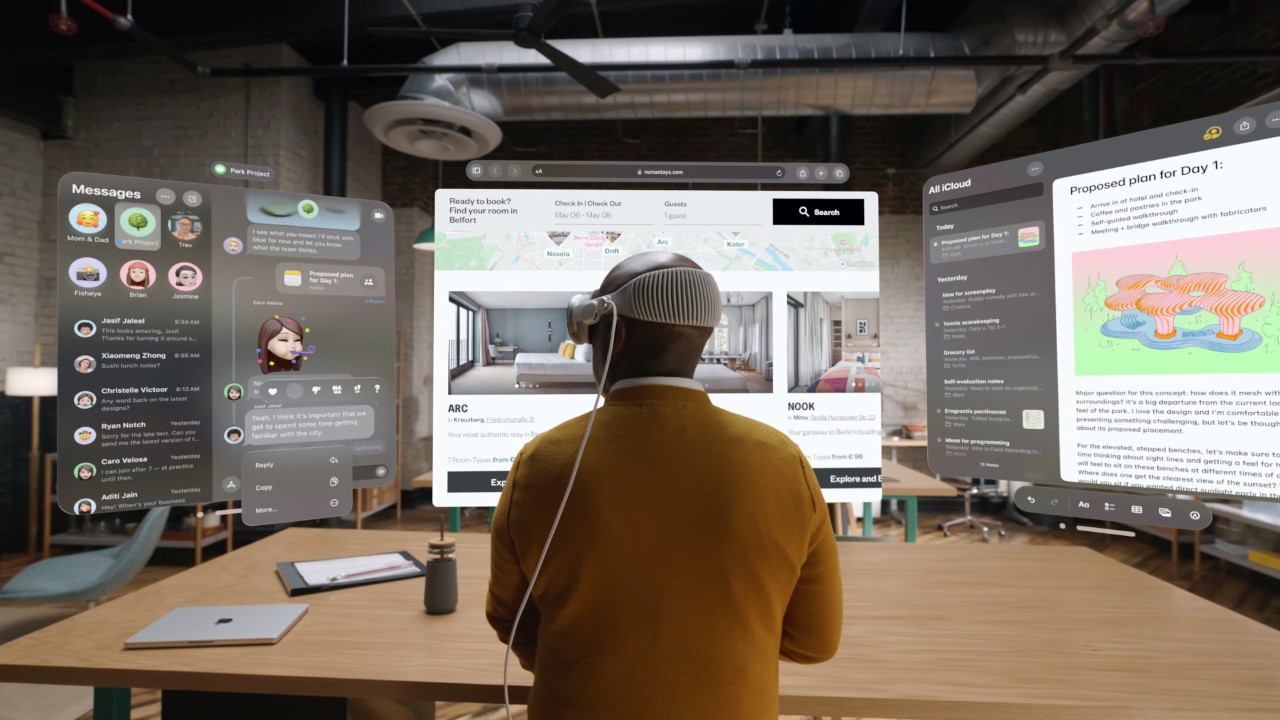

“Vision Pro is a new kind of computer,” says Tim Cook as he reveals the mixed reality headset for the very first time. “It’s the first Apple product you look through, and not at,” he adds, marking Apple’s shift to Spatial Computing. What’s Spatial Computing, you ask? Well, the desktop gave us the Personal Computer, or PC as we so ubiquitously call it today. The laptop shrank the desktop into a portable format, and the phone shrank it further… all the way down to the watch, which put your personal computer on your wrist. Spatial Computing marks Apple’s first shift away from Personal Computing, in the sense that you’re no longer limited by a display, big or small. “Instead, your surroundings become a canvas,” Tim summarizes, as he hands the stage to Apple’s VP of Human Interface Design, Alan Dye.

Spatial Computing marks a new era of computing where the four corners of a traditional display no longer constrain your working environment. Instead, your real environment becomes your working environment, and just as you’ve got folders, windows, and widgets on a screen, the Vision Pro lets you place folders, windows, and widgets in your 3D space. Dye explains that in Spatial Computing, you don’t have to minimize a window to open a new one. Simply drag one window to the side and open another. Apple’s visionOS turns your room and your field of view into the interface, letting you create multiple screens/windows wherever you want, move them around, and resize them. Think Minority Report or Tony Stark’s holographic computer… but with a better, classier interface.

How the M2 and R1 Chips Handle Spatial Computing

At the heart of the Vision Pro headset are two chips that work together to blend the virtual and the real seamlessly. The Vision Pro is equipped with Apple’s M2 chip, which handles general computing and multitasking, along with a new R1 chip made specifically for the headset. The R1 works with all the sensors inside and outside the headset to track your eyes and your input, and helps virtual elements exist convincingly within the real world, doing impressive things like casting shadows on the world around you, changing angles as you move around, or fading away when someone walks into your frame.

The R1 chip is pretty much Apple’s secret sauce with the Vision Pro. It handles data from every single sensor on the device, simultaneously tracking your environment, your position in it, your hands, and even your eye movements with stunning accuracy. Your eye movements form the basis of how the Vision Pro knows which elements you intend to interact with, practically turning your eyes into bona fide cursors. As impressive as that is, the headset also uses your eye data to decide which parts of the scene to render in full detail. Since you can only focus sharply on a small area at any given moment, the Vision Pro renders just the region you’re looking at with crisp clarity and draws the rest of your visual field at lower detail, rather than spending resources rendering the entire scene at full resolution. This technique, known as foveated rendering, is a phenomenally clever way to save processing power and battery while still delivering a brilliantly immersive experience. However, that’s not all…
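
Apple hasn’t published how the Vision Pro decides what to draw sharply, but the general idea behind foveated rendering can be sketched in a few lines of Python. This minimal sketch assumes gaze and screen-region directions arrive as unit vectors; the helper names, the 5° and 20° cutoffs, and the three quality tiers are hypothetical values chosen for illustration, not Apple’s.

```python
import math

def angular_distance_deg(gaze, region):
    """Angle in degrees between the gaze direction and a region's direction (unit vectors)."""
    dot = sum(g * r for g, r in zip(gaze, region))
    dot = max(-1.0, min(1.0, dot))  # clamp rounding error so acos stays in its domain
    return math.degrees(math.acos(dot))

def resolution_scale(eccentricity_deg, fovea_deg=5.0, mid_deg=20.0):
    """Hypothetical tiers: full detail where you're looking, less toward the edges."""
    if eccentricity_deg <= fovea_deg:
        return 1.0
    if eccentricity_deg <= mid_deg:
        return 0.5
    return 0.25

def direction(yaw_deg):
    """Unit vector for a region yaw_deg to the side of straight ahead (the z axis)."""
    yaw = math.radians(yaw_deg)
    return (math.sin(yaw), 0.0, math.cos(yaw))

gaze = direction(0.0)  # looking straight ahead
for yaw in (2.0, 12.0, 40.0):
    scale = resolution_scale(angular_distance_deg(gaze, direction(yaw)))
    # Scaling both axes means a region costs roughly scale**2 of its full pixel count.
    print(f"region {yaw:>4.0f} deg off-gaze -> {scale:.2f}x resolution, ~{scale**2:.0%} of the pixels")
```

In this toy setup, regions near the gaze point stay at full resolution, while one 40° away is drawn at a quarter of the resolution on each axis, roughly 6% of the pixels.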

Apple Engineer Reveals the (Scary) Powerful Capabilities of the R1 Chip

A former neurotechnology researcher at Apple has lifted the veil on just how complex, and somewhat scary, the Vision Pro’s internal tech is. While still bound by an NDA, Sterling Crispin shared in a tweet how the Vision Pro tracks your eyes and knows how you’re navigating its interface so flawlessly. Fundamentally, the R1 chip is engineered to be borderline magical at predicting a user’s eye journey and intent. “One of the coolest results involved predicting a user was going to click on something before they actually did […] Your pupil reacts before you click in part because you expect something will happen after you click,” Crispin mentions. “So you can create biofeedback with a user’s brain by monitoring their eye behavior, and redesigning the UI in real time to create more of this anticipatory pupil response.”

“Other tricks to infer cognitive state involved quickly flashing visuals or sounds to a user in ways they may not perceive, and then measuring their reaction to it,” Crispin further explains. “Another patent goes into details about using machine learning and signals from the body and brain to predict how focused, or relaxed you are, or how well you are learning. And then updating virtual environments to enhance those states. So, imagine an adaptive immersive environment that helps you learn, or work, or relax by changing what you’re seeing and hearing in the background.” Here’s a look at Sterling Crispin’s tweet.

I spent 10% of my life contributing to the development of the #VisionPro while I worked at Apple as a Neurotechnology Prototyping Researcher in the Technology Development Group. It’s the longest I’ve ever worked on a single effort. I’m proud and relieved that it’s finally… pic.twitter.com/vCdlmiZ5Vm

— Sterling Crispin 🕊️ (@sterlingcrispin) June 5, 2023

A Broad Look at Every Sensor on the Apple Vision Pro

Sensors dominate the Vision Pro’s spatial computing abilities, and here’s a look at all the sensors Apple highlighted in the keynote, along with a few others that sit under the Vision Pro’s hood. This list isn’t complete, since the Vision Pro isn’t available for a tech teardown, but it includes every sensor mentioned by Apple.

Cameras – The Vision Pro has an estimated 14 cameras that help it capture details inside and outside the headset. Up to 10 cameras (2 main, 4 downward, 2 TrueDepth, and 2 sideways) on the outer part of the headset sense your environment in stereoscopic 3D, while 4 IR cameras inside the headset track your eyes as well as perform 3D scans of your iris, helping the device authenticate the user.

LiDAR Sensor – The LiDAR sensor uses pulses of light to measure distances, building a 3D map of the world around you. The technology is used in many self-driving car systems, and Apple already puts a LiDAR Scanner on the back of its iPhone Pro and iPad Pro models for depth sensing. (Face ID works differently, projecting a grid of infrared dots and reading it with an IR camera.) On the Vision Pro, the LiDAR sensor sits front and center, right above the nose, where it gets a clear view of the world around you. The headset’s front sensors also capture a 3D model of your face, which it then uses as your digital avatar (what Apple calls a Persona) during FaceTime calls.
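
LiDAR rests on a time-of-flight principle: fire a pulse of light, time how long the reflection takes to come back, and halve the round trip. The Python sketch below shows that arithmetic, plus how a single distance reading along a known ray direction becomes one point of a 3D map. Apple hasn’t detailed how the Vision Pro’s scanner is built, so treat this as the textbook principle rather than Apple’s implementation; the function names are hypothetical.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0

def tof_round_trip_s(distance_m):
    """Time for a light pulse to reach a surface and bounce back."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S

def tof_distance_m(round_trip_s):
    """Distance to a surface from a measured round-trip time (out and back, so halve it)."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0

def to_point(distance_m, ray_direction):
    """Turn one distance reading along a unit ray into an (x, y, z) point in metres."""
    return tuple(distance_m * d for d in ray_direction)

# A wall 3 m away returns the pulse in about 20 nanoseconds.
t = tof_round_trip_s(3.0)
print(f"round trip: {t * 1e9:.2f} ns")
print("point:", to_point(tof_distance_m(t), (0.0, 0.0, 1.0)))
```

The tiny timescale is why LiDAR needs very fast detectors: every nanosecond of timing error translates into roughly 15 cm of range error.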

IR Camera – IR cameras can do a camera’s job when a regular camera can’t. Paired with infrared illuminators, they work even in complete darkness, giving them a significant edge over conventional cameras. That’s why the headset has 4 IR cameras on the inside, and an undisclosed number of IR cameras/sensors on the outside to help the device see regardless of lighting conditions. The IR cameras inside the headset do a remarkable job of eye tracking as well as building a 3D scan of your iris for Apple’s secure Optic ID authentication system.

Illuminators – While these aren’t sensors, they play a key role in helping the sensors do their jobs. The Vision Pro headset has 2 IR illuminators on the outside that flash invisible infrared dot grids to help accurately scan a person’s face (very similar to Face ID). On the inside, the headset has invisible LED illuminators surrounding each eye that help the IR cameras track eye movements and reactions, and perform detailed scans of your iris. These illuminators play a crucial role in low-light settings, giving the IR cameras data to work with.

Accelerometer & Gyroscope – Although Apple didn’t mention these in the keynote, the Vision Pro almost certainly has accelerometers and gyroscopes to help it track movement and tilt. Like any good headset, the Vision Pro tracks with six degrees of freedom: it detects movement along three axes (left/right, forward/backward, and up/down) as well as rotation around them (tilting your head up and down, turning it side to side, and rolling it toward either shoulder). The accelerometer measures linear acceleration, including the constant pull of gravity, while the gyroscope measures how quickly your head is rotating. These sensors, along with the cameras and scanners, give the R1 chip the data it needs to know where you’re standing, moving, and looking.
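
Apple hasn’t said how the Vision Pro fuses its motion data (and it also leans on its cameras for tracking), but a classic textbook way to combine these two sensors is a complementary filter: the gyroscope is smooth but drifts over time, while the accelerometer’s reading of gravity is noisy but doesn’t drift. The Python sketch below estimates head pitch that way; the axis convention, the 0.98 blend factor, and the 0.5°/s gyroscope bias are illustrative assumptions.

```python
import math

def accel_pitch_deg(ay, az):
    """Pitch implied by gravity alone, assuming y points forward and z up (hypothetical axes).
    Only reliable while the head isn't accelerating."""
    return math.degrees(math.atan2(ay, az))

def fuse_pitch(prev_pitch_deg, gyro_rate_dps, accel_pitch, dt_s, alpha=0.98):
    """One complementary-filter step: trust the gyro short-term, gravity long-term."""
    gyro_estimate = prev_pitch_deg + gyro_rate_dps * dt_s
    return alpha * gyro_estimate + (1.0 - alpha) * accel_pitch

# Simulate a head held still at 10 degrees of pitch for 10 s (100 Hz samples),
# read by a gyroscope with a small 0.5 deg/s bias.
true_pitch = 10.0
ay = math.sin(math.radians(true_pitch))
az = math.cos(math.radians(true_pitch))
dt, gyro_bias = 0.01, 0.5

gyro_only = fused = true_pitch
for _ in range(1000):
    gyro_only += gyro_bias * dt  # pure integration accumulates the bias
    fused = fuse_pitch(fused, gyro_bias, accel_pitch_deg(ay, az), dt)

print(f"gyro only: {gyro_only:.2f} deg, fused: {fused:.2f} deg")
```

After ten seconds the gyroscope-only estimate has drifted 5° off, while the fused estimate settles within about a quarter of a degree of the true 10°.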

Microphones – The Vision Pro has an undisclosed number of microphones built into the headset that handle two broad jobs: voice detection and spatial audio. Voice commands form a core part of how you interact with the headset, which is why the Vision Pro has microphones that let you run search queries, summon apps/websites, and talk naturally to Siri. The headset also needs to gauge your room’s acoustics, just as the cameras scan it visually, so it can match sound to the space you’re in, delivering the right amount of reverb, tonal balance, and so on. Moreover, as you turn your head, sounds stay anchored in the same place because the headset continuously tracks your head’s position and rotation, creating a sonic illusion that lets your ears believe what your eyes see.
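
Keeping a sound pinned in place while your head turns comes down to geometry: subtract the head’s rotation from the sound’s position in the room, then pan the audio accordingly. Production spatial audio typically relies on head-related transfer functions (HRTFs) rather than simple stereo panning, so the Python sketch below is only a simplified illustration; the yaw-only model, the angle convention (positive means to the right), and the constant-power pan are assumptions for clarity.

```python
import math

def relative_azimuth_deg(source_azimuth_deg, head_yaw_deg):
    """Where a room-anchored sound sits relative to your nose, wrapped to [-180, 180).
    Positive angles are to the right."""
    return (source_azimuth_deg - head_yaw_deg + 180.0) % 360.0 - 180.0

def stereo_gains(rel_azimuth_deg):
    """Constant-power pan: -90 deg is fully left, +90 deg fully right.
    (Simple panning can't tell front from back; HRTFs can.)"""
    pan = math.sin(math.radians(rel_azimuth_deg))  # -1 (left) .. +1 (right)
    angle = (pan + 1.0) * math.pi / 4.0            # 0 .. pi/2
    return math.cos(angle), math.sin(angle)        # (left gain, right gain)

# A virtual speaker sits straight ahead in the room (0 deg). Turn your head right.
for head_yaw in (0.0, 45.0, 90.0):
    rel = relative_azimuth_deg(0.0, head_yaw)
    left, right = stereo_gains(rel)
    print(f"head {head_yaw:>4.0f} deg -> sound at {rel:>5.0f} deg, L={left:.2f} R={right:.2f}")
```

The sound’s position in the room never changes; only its angle relative to your head does, which is why turning 90° to the right moves a sound that was straight ahead fully into your left ear.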

Other Key Components

Aside from the sensors, the Vision Pro is filled with a whole slew of tech components, from screens to battery packs. Here’s a look at what else lies underneath the Vision Pro’s hood.

Displays – Given its name, the Vision Pro naturally focuses heavily on your visual sense… and it does so with some of the most incredible displays ever seen. The Vision Pro has two stamp-sized displays (one for each eye), each boasting more pixels than a 4K television, for a staggering 23 million pixels combined. Apple also quoted a 12-millisecond figure, but that describes how quickly the R1 chip streams new images to the displays (a latency), not the displays’ refresh rate, so it can’t be converted into a frame rate. Meanwhile, the outside of the headset has a display too, which shows your eyes to people around you. While the quality of this display isn’t known, it is a curved OLED screen with a lenticular film in front of it that creates the impression of a 3D display, so people see depth in your eyes rather than just a flat image.
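
A quick back-of-the-envelope check on Apple’s pixel claim, assuming the 23 million figure (which Apple rounded) is split evenly between the two displays and that “4K television” means a standard 3840 × 2160 UHD panel:

```python
UHD_4K_PIXELS = 3840 * 2160  # assumed consumer "4K" TV resolution

combined_pixels = 23_000_000  # Apple's rounded figure for both displays together
per_eye = combined_pixels // 2  # assumes an even split between the two displays
ratio = per_eye / UHD_4K_PIXELS

print(f"{per_eye:,} pixels per eye vs {UHD_4K_PIXELS:,} on a 4K TV ({ratio:.2f}x)")
```

That works out to roughly 11.5 million pixels per eye, about 1.39 times a 4K TV, which is consistent with Apple’s claim.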

Audio Drivers – The headset’s band also has audio pods built in near each ear, firing rich, environmentally responsive audio into your ears as you wear the headset. Apple mentioned that the Vision Pro has dual audio drivers for each ear, which may hint at sound quality that rivals the AirPods Max.

Fans – To keep the headset cool, the Vision Pro has an undisclosed number of fans that help maintain optimal temperatures inside the headset. The fans are quiet, yet powerful enough to cool not one but two chips inside the headset. A grille on the bottom helps channel out the hot air.

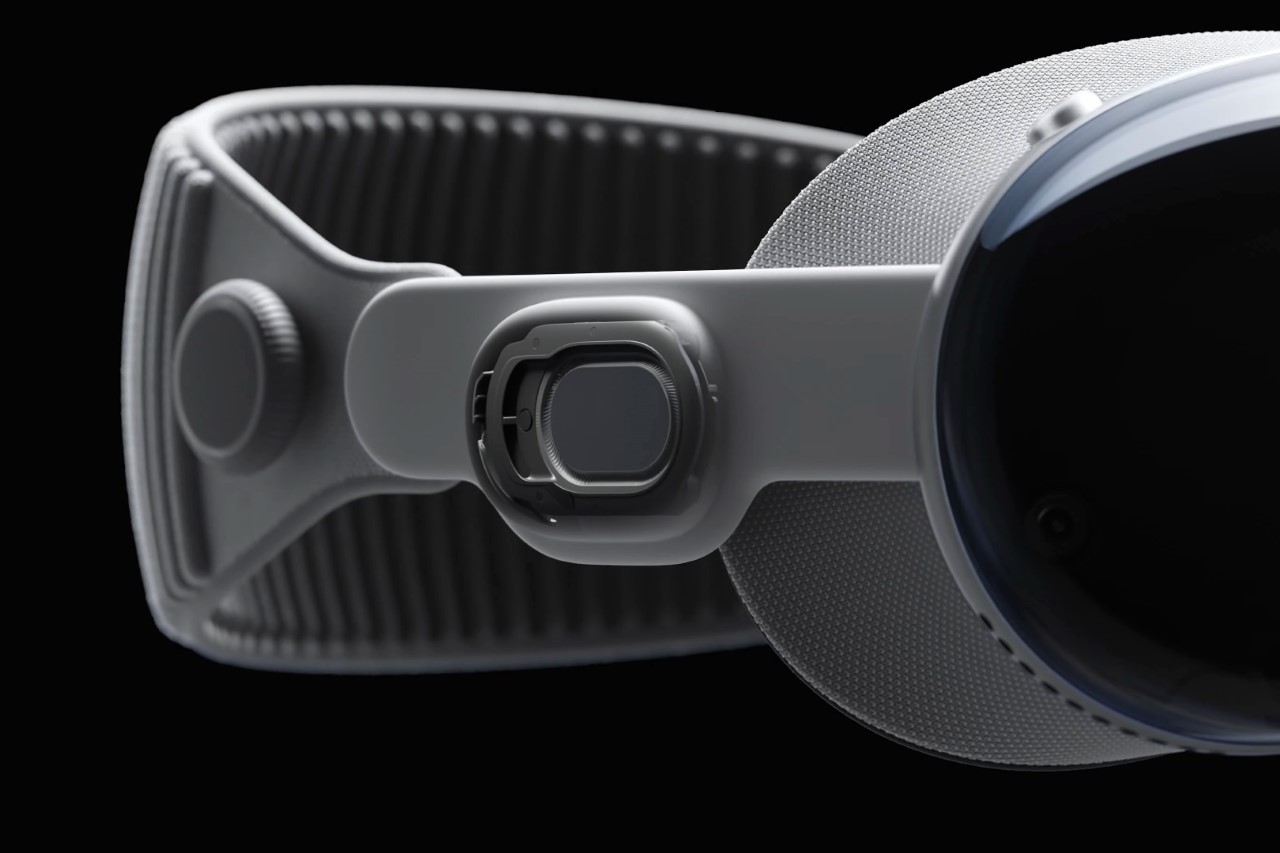

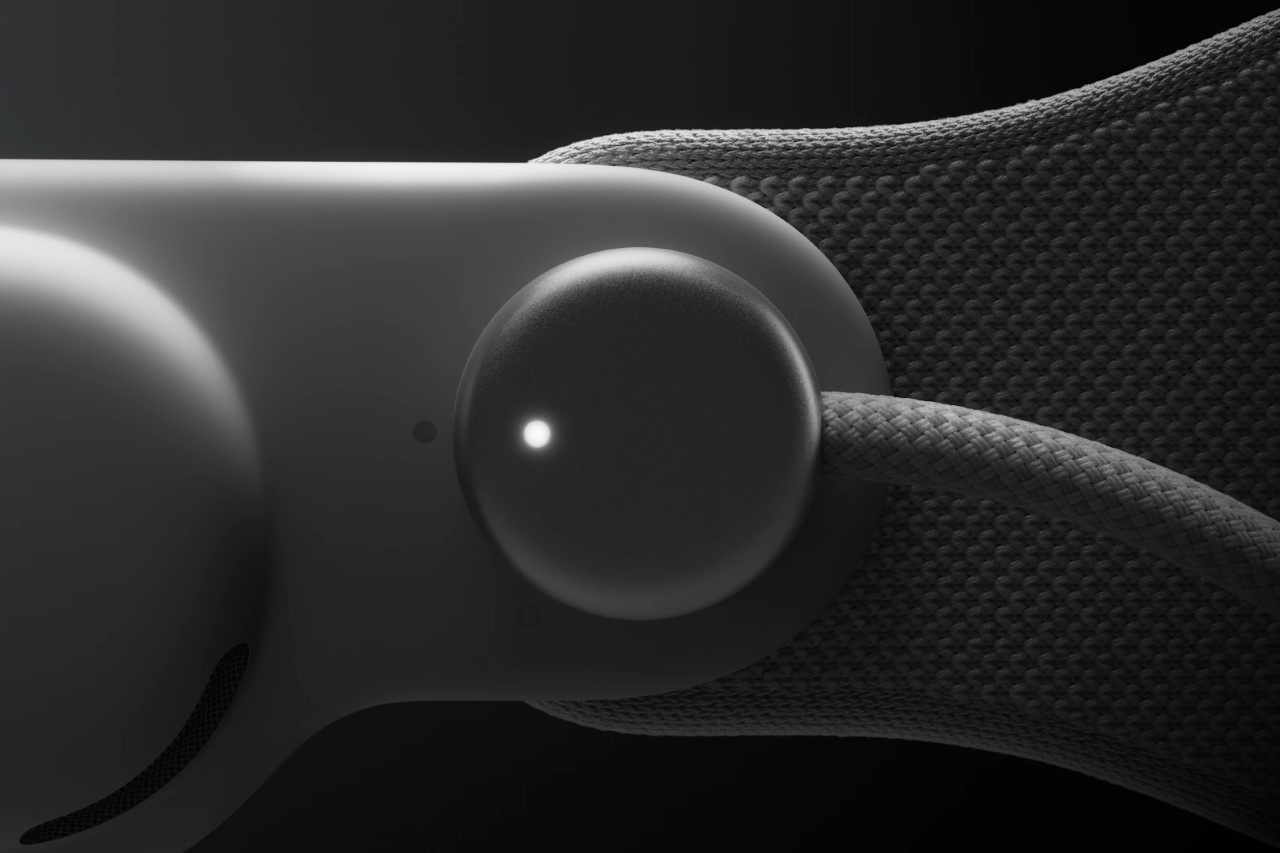

Digital Crown – Borrowing from the Apple Watch, the Vision Pro has a Digital Crown: press it to bring up the Home View, and turn it to dial the immersive environment up or down, gradually blocking out the world around you for a true VR experience.

Shutter Button – The Digital Crown is accompanied by a shutter button that lets you capture 3D photos and videos, which can be viewed within the Vision Pro headset.

Battery – Lastly, the Vision Pro has a separate battery pack that connects to the headset via a cable with a proprietary connector. The battery sits outside the headset to reduce the weight of the headset itself, which already uses metal and glass. Since batteries are heavy, an external pack helps distribute the load. Apple hasn’t shared the battery’s milliamp-hour capacity, but it did mention that the pack gives you 2 hours of usage on a full charge. How the battery charges hasn’t been mentioned either.