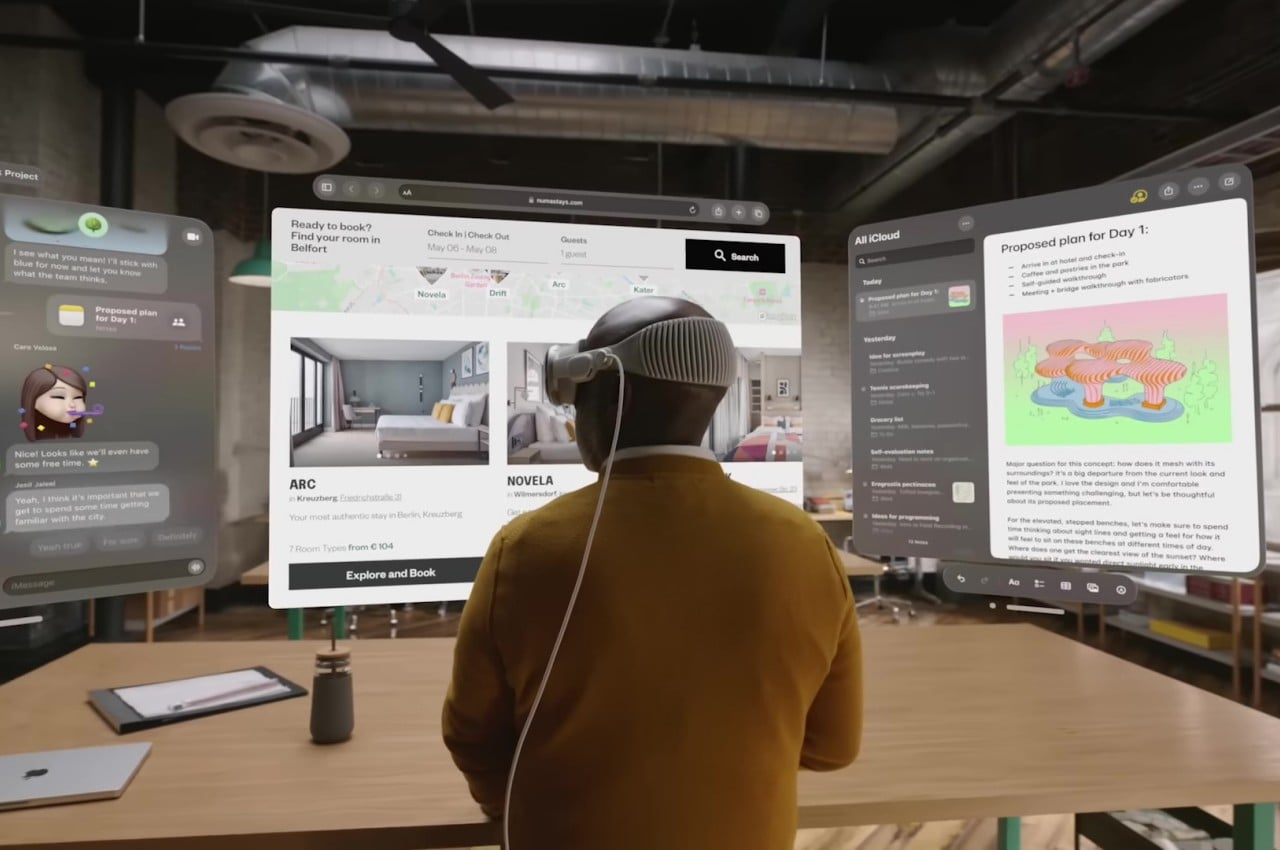

Apple made waves earlier this month when it finally revealed its long-awaited foray into the world of mixed or extended reality. That the company has had its eyes on this market is hardly a secret. In fact, the delayed (at least by market standards) announcement has had some wondering if it was all just wishful thinking. At WWDC 2023, Apple definitely showed the world that it means serious business, perhaps even too serious. The Apple Vision Pro headset itself is already a technological marvel, but in typical Apple fashion, the company didn’t dwell too much on the specs that would make many pundits drool. Instead, Apple homed in on how the sleek headset almost literally opens up a whole new world and breaks down the barriers that limited virtual and augmented reality. More than just the expensive hardware, Apple is selling an even more costly new computing experience, one that revolves around the concept of “Spatial Computing.” But what is Spatial Computing, and does it have any significance beyond viewing photos, browsing the Web, and walking around in a virtual environment? As it turns out, it could be a world-changing experience, virtually and really.

Designer: Apple

Making Space: What is Spatial Computing?

Anyone who has been keeping tabs on trends in the modern world has probably already heard about virtual reality, augmented reality, or even extended reality. Although they sound new to our ears, their origins actually go far, far back, long before Hollywood even got a whiff of them. At the same time, however, we’ve been hearing about these technologies so much, especially from certain social media companies, that you can’t help but roll your eyes at “yet another one” coming our way. Given the hype, it’s certainly understandable to be wary of all the promises that Apple has been making, but that wariness would undersell what makes Spatial Computing really feel like THE next wave in computing.

It’s impossible to discuss Spatial Computing without touching on VR and AR, the granddaddies of what is now collectively called “eXtended Reality” or XR. Virtual Reality (VR) is the better known of the two, especially because it is easier to implement. Remember that cardboard box with a smartphone inside that you strap to your head? That’s pretty much the most basic example of VR, which practically traps you inside a world full of pixels and intangible objects. Augmented Reality (AR) frees you from that made-up world and instead overlays digital artifacts on real-world objects, much like those Instagram filters everyone seems to love or love to hate. The catch is that these are still intangible virtual objects, and nothing you do in the real world really changes them. Mixed Reality (MR) fixes that and bridges the two so that a physical knob can actually change some virtual configuration or a virtual switch can toggle a light somewhere in the room.

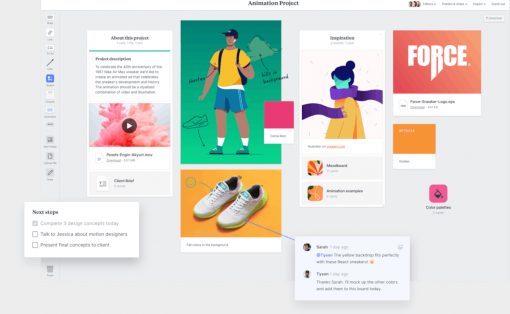

In that sense, Spatial Computing is the culmination of all these technologies but with a very specific focus, which you can discern from its name. In a nutshell, it turns the whole world into your computer, making any available space into an invisible wall on which you can hang your apps’ windows. Yes, there will still be windows (with a small “w”) because of how our software is currently designed, but you can hang up as many as you want in the space you have. Or you can just have one super gigantic video player taking up your vision. The idea also makes use of our brain’s innate ability to associate things with spaces (which is the theory behind the “Memory Palace”) to have us organize our room-sized computer desktop. In a sense, it makes the computer practically invisible, allowing you to directly interact with applications as if they existed physically in front of you because they practically do.

Apple Reality

Of course, you could say that even Microsoft’s HoloLens already did all that. What makes Spatial Computing and Apple’s implementation different is how the virtual and the real affect each other, much like in mixed reality. There is, for example, the direct way we can control the floating applications using nothing but our own bodies, whether it’s with hand gestures or even just the movement of our eyes. This is the fulfillment of all those Minority Report fantasies, except you don’t even need to wear gloves. Even your facial expressions can have an effect on your FaceTime doppelganger, a very useful trick since you won’t have a FaceTime camera available while wearing the Apple Vision Pro.

Apple’s visionOS Spatial Computing, however, is also indirectly affected by your physical environment, and this is where it gets a little magical and literally spatial. According to Apple’s marketing, your virtual windows will cast shadows on floors or walls, and they’ll be affected by ambient light as well. Of course, you’ll be the only one who sees those effects, but they make the windows and other virtual objects feel more real to you. The Vision Pro will also dim its display to mimic the effect of dimming your lights when you want to watch a movie in the dark. It can even analyze surrounding objects and their textures to mix the audio so that it sounds like it’s really coming from all directions and bouncing off those objects.

The number of technologies needed to make this seamless experience possible is quite staggering, which is why Apple didn’t focus too much on the optics, often the key selling point of XR headsets. From the sensors to the processors to the AI that interprets all that data, it’s no longer surprising that it took Apple this long to announce the Vision Pro and its Spatial Computing. It is, however, also the company’s biggest gamble, and it could very well ruin Apple if it crashes and burns.

Real Design

Spatial Computing is going to be a game-changer, but it’s not a change that will happen overnight, no matter how much Apple wants it to. This is where computing is heading, whether we like it or not, but it’s going to take a lot of time as well. And while it may have “computing” in its name, its ramifications will impact almost all industries, not just entertainment and, well, computing. When Spatial Computing does take off, it will even change the way we design and create things.

Many designers are already using advanced computing tools like 3D modeling software, 3D printers, and even AI to assist their creative process. Spatial Computing will take it up a notch by letting designers have a more hands-on approach to crafting. Along with “digital twins” and other existing tools, it will allow designers and creators to iterate over designs much faster, letting them measure a hundred times and print only once, saving time, resources, and money in the long run.

Spatial Computing also has the potential to change the very design of products themselves, but not in the outlandish way that the Metaverse has been trying to do. In fact, Spatial Computing flips the narrative and gives more importance to physical reality rather than having an expensive, one-of-a-kind NFT sneaker you can’t wear in real life. Spatial Computing highlights the direct interaction between physical and virtual objects, and this could open up a new world of physical products designed to interact with apps or, at the very least, influence them by their presence and composition. It might be limited to what we would consider “computing,” but in the future, computing will pretty much be the way everyone will interact with the world around them, just like how smartphones are today.

Human Nature

As grand as Apple’s Vision might be, it will be facing plenty of challenges before its Spatial Computing can be considered a success, the least of which is the price of the Vision Pro headset itself. We’ve highlighted those Five Reasons Why the Apple Vision Pro Might Fail, and the biggest reason will be the human factor.

Humans are creatures of habit as well as tactile creatures. It took years, maybe even decades, for people to get used to keyboards and mice, and some people struggle with touch screens even today. While Apple’s Spatial Computing promises the familiar controls of existing applications, the way we will interact with them will be completely gesture-based and, therefore, completely new. Add to that the fact that even touch screens give our fingers something to feel, and you can already imagine how alien those air hand gestures might be for the first few years.

Apple surely did its due diligence in ergonomic and health studies and designs, but it’s not hard to see how this won’t be the most common way people will do computing, even if you make the Vision Pro dirt cheap. Granted, today’s computers and mobile devices are hardly ergonomic by design, but there have been plenty of solutions developed by now. Spatial Computing is still uncharted territory, even after VR and AR have long blazed a trail. It will definitely take time for our bodies to get used to it before Spatial Computing becomes almost second nature, and Apple will have to stay strong until then.

Final Thoughts

As expected, Apple wasn’t content to announce just another AR headset to join an uncertain market. The biggest surprise was its version of Spatial Computing, formally marketed as visionOS. Much of what we’ve seen is largely marketing and promises, but this is Apple we’re talking about. It might as well be reality, even if it takes a while to fully happen.

Unlike the entertainment-centric VR or the almost ridiculous Metaverse, Spatial Computing definitely feels like the next evolution of computing that will be coming sooner rather than later. It’s definitely still at an early stage, even if the seeds were planted nearly two decades ago, but it clearly shows potential to become more widely accepted because of its more common and general applications. It also has the potential to change our lives in less direct ways, like changing the way we learn or even design products. It’s not yet clear how long it will take, but it’s not hard to see how Apple’s Vision of the future could very well be our own.